- Describe Fitt's law in words. What three quantities does it relate?

[3]

The time to acquire a target is a (logarithmic) function

of (the ratio of) the distance and the size of the target.

- The user's mouse cursor is somewhere roughly in the centre third of

the screen. List in order the top five easiest locations on the screen

for the user to access using the mouse, and explain why. (Your answer

of the locations is not as important as your explanation of why.)

[4]

(1) Right under the cursor: no motion required.

(2) Bottom-right corner of the screen: Fitt's law, corners are like

infinitely large targets. Pushing is easier for the arm than pulling;

the bottom-right corner is an arc away from the body for a right-handed

user.

(3) Top-left corner: arc toward the body

(4) Top-right

(5) Bottom-left

- Name at least three of the groundbreaking innovations in

Engelbart's NLS prototype demonstration in 1968. [3]

mouse, windowing system, hyperlinks, chording keyboard,

collaborative work (email, IM, video conf)

- What was one of the most important advances in the introduction of

the Apple Macintosh in 1984? (There's room for debate here; argue

your case.)[3]

Computers for the masses: much cheaper ($3k rather than

over $10k), mass-marketing ad campaign including TV spots during

Superbowl and Olympics.

- What are events and callbacks?

Describe an example. [4]

An event is an action, usually triggered by the user: e.g., clicking on a

button, selecting a menu item, pressing Enter, moving the mouse. A callback

is a procedure/function

invoked when a corresponding event happens. For example, the exit() function

might be run when the user clicks on a "Quit" button.

- The CubeView tutorial example in Lab0 had three C++ files:

CubeView.cxx, CubeMain.cxx, and CubeViewUI.cxx. Why have three files?

Contrast their purposes. [3]

CubeMain was very small, just had the main() function and kicked off an

instance of CubeViewUI. CubeViewUI was generated by fluid, and just contained

the user interface widgets. The primary functionality was in CubeView.cxx, in

a class that was used/instantiated by CubeViewUI.

- Describe a complete human-computer interface other than our standard

keyboard, monitor, mouse/touchpad/etc. Describe an application for which this

interface might be better-suited than our traditional interface. [4]

- Describe the vonNeumann model of computing. [3]

A computer processes input data according to input

instructions, and produces output results.

The computer's memory can store both the input data as well as the instructions.

- Describe Flynn's taxonomy of parallel computing.

(Give more detail than just expanding the acronyms!) [4]

SISD: single instruction, single data: regular uniprocessing

SIMD: single instruction, multiple data: apply the same instructions

to a whole vector of data in parallel. Also called vector computing.

Most common form of symmetric multiprocessing. Early Cray supercomputers

were mostly SIMD.

MISD: multiple instruction, single data: applying different operations

to the same block of data in parallel. E.g., attempting several decryption

algorithms to the same ciphertext. Not commonly used.

MIMD: multiple instruction, multiple data: several processing nodes,

each with their own data and own tasks. Most general form of parallel

computing.

- What does granularity mean in the context of parallel

programming? What kind of granularity do we generally want, and why?

[4]

Granularity describes the amount of local computation done relative

to the frequency of communication with other threads. In distributed

computing, we generally want coarse granularity, which means a lot of

computation is done locally with very little communication.

E.g., FoldingAtHome, a computer can work on a task for weeks before

reporting back to the server.

- Describe the three parallel programming (API) models we discussed

in class, and name an example API for each. (The fourth programming model we

talked about is hybrids of these three.) [5]

- Threads: master thread forks worker threads; collects results when threads

complete. OpenMP, POSIX Threads.

- Message passing: threads communicate by sending messages to each other.

MPI.

- Data parallelism: each thread performs same work in parallel on a

different chunk of the data. HPF.

- Describe synchronous vs. asynchronous communication

in general and give an every-day example of each

(need not be computer-related). [3]

Synchronous: both sender and receiver are connected at the same time.

Phone call, face-to-face conversation, TCP.

Asynchronous: sending of message and receiving of message might not

happen at the same time. Email, postal mail, voicemail, TV/radio, UDP.

- Now compare the pros/cons of synchronous vs. asynchronous

communication in the context of parallel programming. [3]

Synchronous communication is easier to program, but introduces idle time

while one thread is waiting for another, leading to inefficiencies.

Asynchronous allows threads to continue while waiting for the other

side, but logic is needed to tell when the other side has received the

message.

- Compare the pros/cons of OpenMP vs. MPI. [4]

OpenMP uses the threading model instead of the message-passing model, is easier

to program in and easier to add-on to existing serial code. The programmer

need not know how many processors the program is actually using. It is generally

more well-suited to a shared-memory model. MPI is more complex to program in

but provides more control over synchronization and communication. MPI often

scales up better to more processors; it is more appropriate for

distributed-memory models.

- Describe the (1) shared, (2) distributed, and

(3) hybrid memory models of

parallel computing. Draw the diagrams illustrating how memory and processors

are tied together. What are the advantages/disadvantages of shared memory vs.

distributed memory models? [5]

- Describe Amdahl's law of parallel computing. What is the

take-home message? [4]

Speedup = 1 / ( P/N + (1-P) ), where P is the fraction of code that is

parallelizable, and N is the number of processors.

Take-home message is that the important thing is not just the number

of processors we throw at a problem, but the fraction of code that can

be parallelized efficiently.

- Below is a simple C program to estimate pi by generating random (x,y)

points in the unit square. The fraction of points that lie inside the

unit quarter-circle is an estimate of pi/4. Parallelize this

program with OpenMP (a complete OpenMP program).

Pay attention to shared/private variables, as well as issues of granularity.

Use a separate sheet of paper if necessary. [6]

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

int main(int argc, char *argv[]) {

double x, y, pi;

int i, count, num_steps;

num_steps = atoi(argv[1]); /* command-line arg */

#pragma omp parallel for private(x,y) schedule(static, 1000)

for (i=0; i < num_steps; i++) {

x = (double) rand()/RAND_MAX;

y = (double) rand()/RAND_MAX;

if (x*x + y*y < 1) count++; /* in unit circle? */

}

pi = 4.0 * count / num_steps;

printf("pi = %32.30f\n", pi);

return 0;

}

(See pi-monte.cpp)

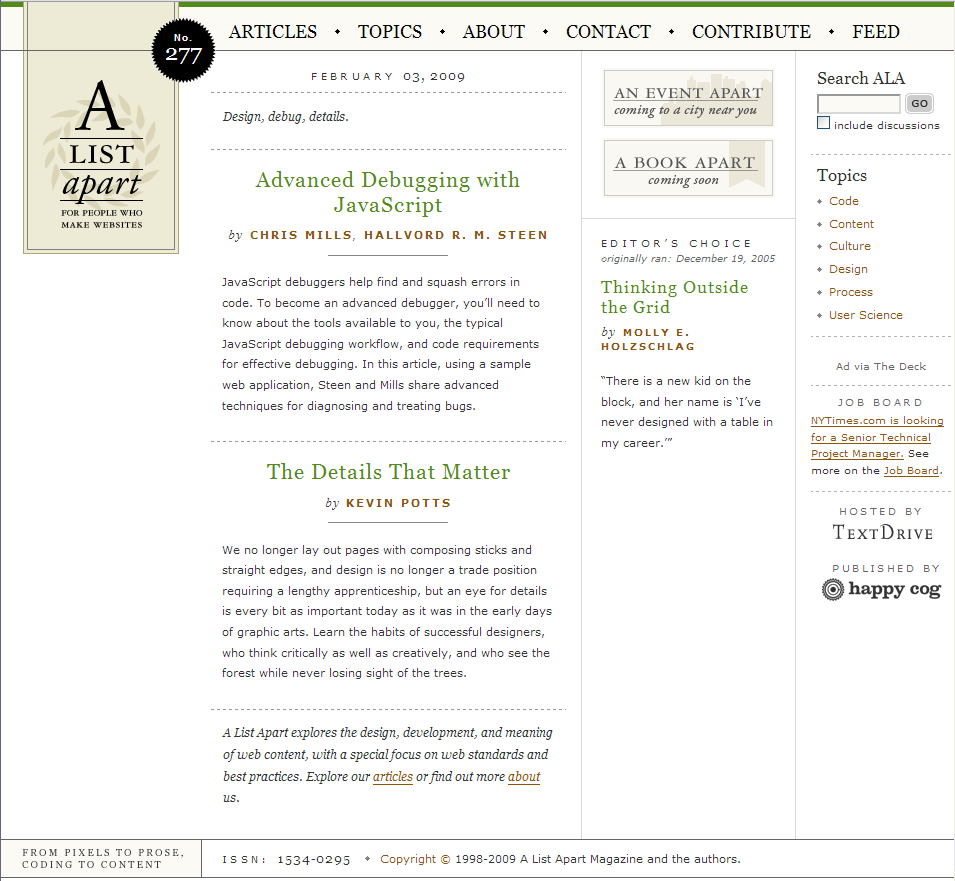

- Attached is a printout of

A List Apart's

frontpage (they are a free online magazine publishing articles on

web design and HTML/CSS/JS techniques).

Critique their user interface design: good points and

bad points. Bear in mind that this is a static printout of a website.

Be as detailed as you can, and cite specific examples. You may wish to

circle items on the printout.

[5]